Connect to an AI Coding Agent with Context7 MCP

Today, developers increasingly use large language models (LLMs) to explore the product documentation they need to build their solutions. This saves significant time compared to searching manually.

The main problem with using an LLM is outdated data: we do not know when the model's information was last updated. One solution is the Model Context Protocol (MCP), which standardizes how an LLM interacts with information sources and keeps the information it receives current.

For convenience, Wordize lets you explore its documentation through Context7 MCP.

Add Wordize Documentation to Your LLM’s Context

To make Wordize available directly in your AI environment, you can connect it via Context7 MCP, a framework that embeds documentation, APIs, and examples into the LLM's working context. This integration lets you ask your AI tools context‑aware, actionable questions and receive more accurate answers.

To enable access to Wordize documentation for your AI agent:

- Generate an API key to authenticate requests to the Context7 API while using the MCP server

- Install and configure MCP for your development environment by following the instructions

The following code example shows how to connect to Context7 via an API key:

    "context7": {
      "type": "http",
      "url": "https://mcp.context7.com/mcp",
      "headers": {
        "CONTEXT7_API_KEY": "YOUR_API_KEY"
      }
    }
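
If you prefer not to paste the key into a configuration file by hand, the entry can be generated instead. The following Python snippet is a minimal sketch, not an official Wordize or Context7 tool: the function name `build_context7_entry` is hypothetical, and reading the key from an environment variable named `CONTEXT7_API_KEY` is an assumption of this example. Where the printed entry goes inside your MCP client's configuration file depends on the client.

```python
import json
import os


def build_context7_entry(api_key: str) -> dict:
    """Return the "context7" server entry from the snippet above with the given key."""
    return {
        "context7": {
            "type": "http",
            "url": "https://mcp.context7.com/mcp",
            "headers": {"CONTEXT7_API_KEY": api_key},
        }
    }


if __name__ == "__main__":
    # Assumption: the key is exported as CONTEXT7_API_KEY in the shell;
    # otherwise the placeholder from the documentation is used.
    key = os.environ.get("CONTEXT7_API_KEY", "YOUR_API_KEY")
    print(json.dumps(build_context7_entry(key), indent=2))
```

Keeping the key in an environment variable means the generated JSON can be recreated at any time without storing the secret in a script.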

Use Wordize Documentation Inside Your AI Agent with Context7 MCP

Once Context7 MCP is connected, your AI agent can answer contextual requests – your model will fetch the right examples, methods, and syntax directly from Wordize docs.

To restrict the context used for retrieving information, it is recommended to explicitly mention /websites/wordize and /websites/reference_wordize_net in your prompt.

Try prompting it with the following:

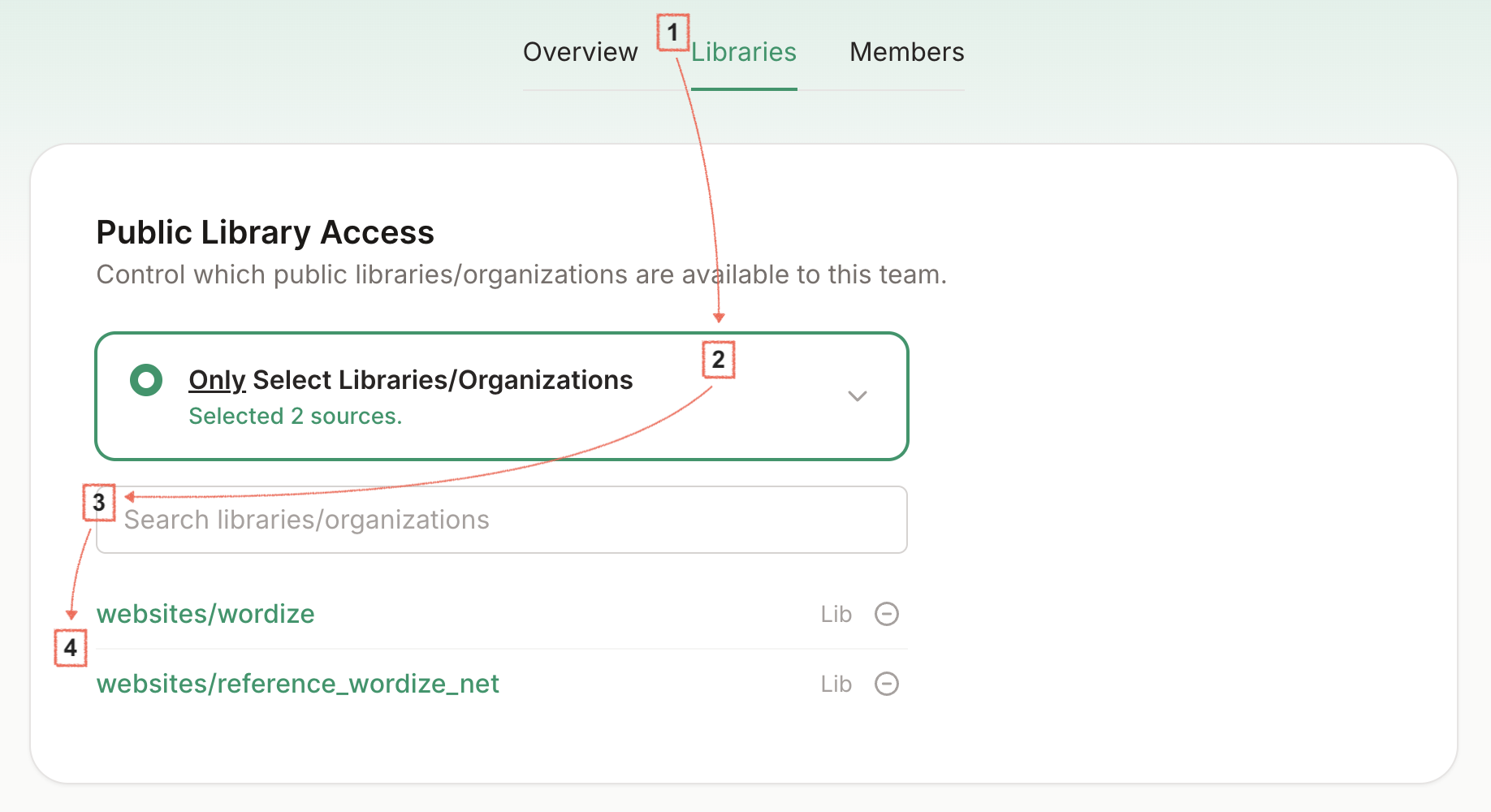
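
If you send prompts from a script, the library IDs can be appended automatically. This is a minimal sketch under stated assumptions: the helper `scope_prompt` and the wording of the appended hint are hypothetical, and only the two library IDs come from this page.

```python
# Library IDs recommended in this article.
WORDIZE_LIBRARIES = ("/websites/wordize", "/websites/reference_wordize_net")


def scope_prompt(question: str, libraries=WORDIZE_LIBRARIES) -> str:
    """Append a hint naming every library ID the question does not already mention."""
    missing = [lib for lib in libraries if lib not in question]
    if not missing:
        return question
    return f"{question.rstrip()} Use Context7 with {' and '.join(missing)}."


if __name__ == "__main__":
    # Sample question for illustration only.
    print(scope_prompt("How do I get started with Wordize?"))
```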

To avoid specifying libraries in the prompt each time, go to the Libraries tab, select “Only Select Libraries/Organizations”, and use the search bar to specify the Wordize libraries only:

- /websites/wordize

- /websites/reference_wordize_net

Why Use Context7 MCP with Wordize

Context7 enhances an LLM’s access to documentation. Instead of pasting docs or searching manually, your AI agent can now query structured Wordize documentation as context.

You get the following benefits:

- Faster answers: LLMs instantly get information from the official Wordize documentation

- Contextual understanding: your AI assistant understands API references, SDK syntax, and examples

- Always up‑to‑date: the MCP connector automatically syncs with Wordize documentation sources

- Model independence: works with any LLM (ChatGPT, Claude, local models, etc.) that supports MCP integration

By integrating Wordize Documentation with Context7 MCP, developers can add up‑to‑date API references and code examples directly to their AI programming environment.

This simplifies learning, experimenting, and coding with the guidance of your AI assistant.

FAQ

Q: How do I obtain a Context7 API key?

A: Sign in to the Context7 dashboard at https://context7.com/dashboard, navigate to the API Keys section, and click Create New Key. Copy the generated key and store it securely; you will use it in the MCP configuration header CONTEXT7_API_KEY.

Q: What should the MCP configuration look like to connect Wordize documentation?

A: The MCP JSON must specify the type as http, the url as https://mcp.context7.com/mcp, and include a headers object containing your CONTEXT7_API_KEY. See the code snippet in the Add Wordize Documentation section for a minimal example.

Q: Do I need to mention the library IDs in every prompt?

A: It is recommended but not mandatory. Including /websites/wordize and /websites/reference_wordize_net in the prompt narrows the search to Wordize content. To avoid repeating them, configure the Libraries tab in the MCP UI to select only those libraries for the session.

Q: Can this integration be used with any LLM provider?

A: Yes. Context7 MCP is LLM‑agnostic; as long as your AI client supports MCP and can reach the MCP endpoint over HTTP, it works with models from OpenAI and Anthropic, local models, etc.

Q: I receive a 401 Unauthorized error when the agent tries to fetch documentation. What should I check?

A: Verify that the CONTEXT7_API_KEY header contains a valid, active key and that there are no extra spaces or line‑break characters. Also ensure the key has permission to access the Wordize libraries in the Context7 portal. Regenerating the key often resolves the issue.
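
The formatting checks from the last answer can be run locally before the key goes into the configuration. The snippet below is a minimal sketch with a hypothetical helper, `find_key_problems`; it only detects formatting issues such as stray whitespace, line breaks, or an unreplaced placeholder, and cannot tell whether the key is active or has the right permissions.

```python
def find_key_problems(raw_key: str) -> list[str]:
    """Return a list of formatting problems found in a Context7 API key string."""
    problems = []
    if not raw_key:
        problems.append("key is empty")
    if raw_key != raw_key.strip():
        problems.append("leading or trailing whitespace")
    if "\r" in raw_key or "\n" in raw_key:
        problems.append("line-break character")
    if raw_key == "YOUR_API_KEY":
        problems.append("placeholder value was not replaced")
    return problems


if __name__ == "__main__":
    # A key copied with a trailing space, as an illustration.
    for problem in find_key_problems("example-key "):
        print("Problem:", problem)
```

An empty result means only that the key is well-formed; if the 401 error persists, check the key's status in the Context7 dashboard.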